Working With Files – Getting Started With Wherobots Cloud Part 3

Contributors

- William Lyon, Developer Relations at Wherobots

This is the third post in a series that will introduce Wherobots Cloud and WherobotsDB, covering how to get started with cloud-native geospatial analytics at scale.

- Part 1: Wherobots Cloud Overview

- Part 2: The Wherobots Notebook Environment

- Part 3: Working With Files (this post)

In the previous post in this series we saw how to access and query data using Spatial SQL in Wherobots Cloud via the Wherobots Open Data Catalog. In this post we’re going to take a look at loading and working with our own data in Wherobots Cloud as well as creating and saving data as the result of our analysis, such as the end result of a data pipeline. We will cover importing files in various formats including CSV, GeoJSON, Shapefile, and GeoTIFF in WherobotsDB, working with AWS S3 cloud object storage, and creating GeoParquet files using Apache Sedona.

If you’d like to follow along you can create a free Wherobots Cloud account at cloud.wherobots.com.

Loading A CSV File From A Public S3 Bucket

First, let’s explore loading a CSV file from a public AWS S3 bucket. In our SedonaContext object we’ll configure the anonymous S3 authentication provider for the bucket so that we can read this specific bucket’s contents without credentials. Most access configuration happens in the SedonaContext configuration object in the notebook, but we can also apply these settings when creating the notebook runtime by specifying additional Spark configuration in the runtime configuration UI. See this page in the documentation for more examples of configuring cloud object storage access using the SedonaContext configuration object.

from sedona.spark import *

# Use anonymous credentials for the public wherobots-examples bucket only
config = SedonaContext.builder(). \
    config("spark.hadoop.fs.s3a.bucket.wherobots-examples.aws.credentials.provider",
           "org.apache.hadoop.fs.s3a.AnonymousAWSCredentialsProvider"). \
    getOrCreate()

sedona = SedonaContext.create(config)

Access to private S3 buckets can be configured either by specifying access keys or, for a more secure option, by using an IAM role trust policy.
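The configuration key used above follows the Hadoop S3A per-bucket pattern, with the bucket name embedded in the key. As a minimal sketch, here is how that key could be built for any public bucket; the helper name anonymous_bucket_conf is hypothetical and not part of Sedona or Wherobots.

```python
ANONYMOUS_PROVIDER = "org.apache.hadoop.fs.s3a.AnonymousAWSCredentialsProvider"


def anonymous_bucket_conf(bucket: str) -> dict:
    """Return the Spark setting that enables anonymous reads for one S3 bucket."""
    # Hadoop S3A per-bucket options follow fs.s3a.bucket.<bucket>.<option>;
    # the spark.hadoop. prefix passes the option through Spark to Hadoop.
    key = f"spark.hadoop.fs.s3a.bucket.{bucket}.aws.credentials.provider"
    return {key: ANONYMOUS_PROVIDER}


conf = anonymous_bucket_conf("wherobots-examples")
for key, value in conf.items():
    print(key, "=", value)
```

Each key and value pair can then be passed to a config(key, value) call on the SedonaContext builder, so other buckets keep their default credential handling.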

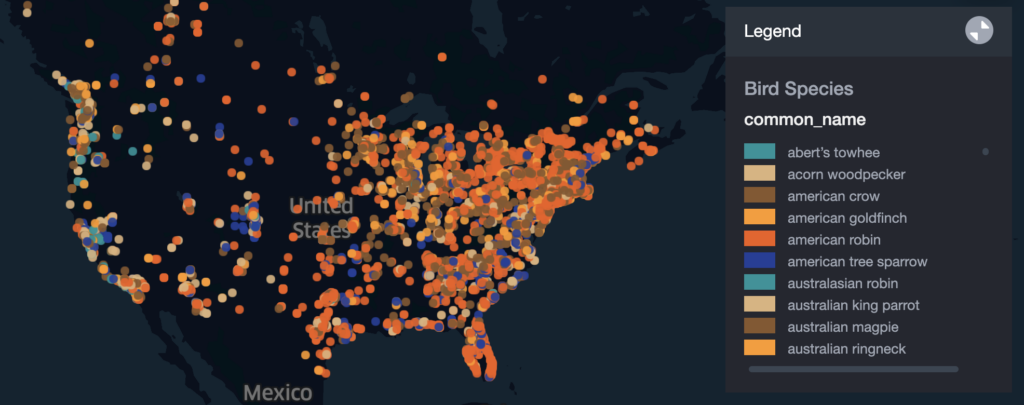

Now that we’ve configured the anonymous S3 credentials provider we can use the S3 URI to access objects within the bucket. In this case we’ll load a CSV file of bird species observations. We’ll use the ST_Point function to convert the individual longitude and latitude columns into a single Point geometry column.

S3_CSV_URL = "s3://wherobots-examples/data/examples/birdbuddy_oct23.csv"

# Read the CSV with a header row and let Spark infer the column types
bb_df = sedona.read.format('csv'). \
    option('header', 'true'). \
    option('delimiter', ','). \
    option('inferSchema', 'true'). \
    load(S3_CSV_URL)

# Build a Point geometry from the longitude (x) and latitude (y) columns
bb_df = bb_df.selectExpr(
    'ST_Point(anonymized_longitude, anonymized_latitude) AS location',
    'timestamp',
    'common_name',
    'scientific_name')

bb_df.createOrReplaceTempView('bb')
bb_df.show(truncate=False)

+----------------------------+-----------------------+-------------------------+----------------------+
|location                    |timestamp              |common_name              |scientific_name       |
+----------------------------+-----------------------+-------------------------+----------------------+
|POINT (-118.59075 34.393112)|2023-10-01 00:00:02.415|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:02.415|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:04.544|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:04.544|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:05.474|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:05.474|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:05.487|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:05.487|california scrub jay     |aphelocoma californica|
|POINT (-120.5542 43.804134) |2023-10-01 00:00:05.931|lesser goldfinch         |spinus psaltria       |
|POINT (-120.5542 43.804134) |2023-10-01 00:00:05.931|lesser goldfinch         |spinus psaltria       |
|POINT (-120.5542 43.804134) |2023-10-01 00:00:06.522|lesser goldfinch         |spinus psaltria       |
|POINT (-120.5542 43.804134) |2023-10-01 00:00:06.522|lesser goldfinch         |spinus psaltria       |
|POINT (-120.5542 43.804134) |2023-10-01 00:00:09.113|lesser goldfinch         |spinus psaltria       |
|POINT (-120.5542 43.804134) |2023-10-01 00:00:09.113|lesser goldfinch         |spinus psaltria       |
|POINT (-118.59075 34.393112)|2023-10-01 00:00:09.434|california scrub jay     |aphelocoma californica|
|POINT (-118.59075 34.393112)|2023-10-01 00:00:09.434|california scrub jay     |aphelocoma californica|
|POINT (-122.8521 46.864)    |2023-10-01 00:00:17.488|red winged blackbird     |agelaius phoeniceus   |
|POINT (-122.8521 46.864)    |2023-10-01 00:00:17.488|red winged blackbird     |agelaius phoeniceus   |
|POINT (-122.2438 47.8534)   |2023-10-01 00:00:18.046|chestnut backed chickadee|poecile rufescens     |
|POINT (-122.2438 47.8534)   |2023-10-01 00:00:18.046|chestnut backed chickadee|poecile rufescens     |
+----------------------------+-----------------------+-------------------------+----------------------+

We can visualize a sample of this data using SedonaKepler, the Kepler GL integration for Apache Sedona.

SedonaKepler.create_map(df=bb_df.sample(0.001), name="Bird Species")
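The argument order in ST_Point matters: longitude is the x coordinate and comes first, latitude is y and comes second. The plain-Python sketch below mirrors that conversion outside of Spark. The coordinate values are copied from the output above, while the column order of the sample text and the helper to_wkt_point are assumptions made for illustration.

```python
import csv
import io

# Two sample rows reusing the column names from the bird observation file.
# The column order here is an assumption; the values come from the output above.
SAMPLE_CSV = """anonymized_latitude,anonymized_longitude,common_name
34.393112,-118.59075,california scrub jay
43.804134,-120.5542,lesser goldfinch
"""


def to_wkt_point(longitude: float, latitude: float) -> str:
    """Format a point as WKT: x (longitude) first, then y (latitude)."""
    return f"POINT ({longitude} {latitude})"


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
points = [
    to_wkt_point(float(r["anonymized_longitude"]), float(r["anonymized_latitude"]))
    for r in rows
]
print(points)
```

Swapping the two arguments would still produce valid points, just in the wrong place on the map, so it is worth checking a few rows against a known location.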

Uploading A GeoJSON File Using The Wherobots Cloud File Browser

Next, let’s see how we can upload our own data in Wherobots Cloud using the Wherobots Cloud File Browser UI. Wherobots Cloud accounts include secure file storage in private S3 buckets specific to our user or shared with other users of our organization.

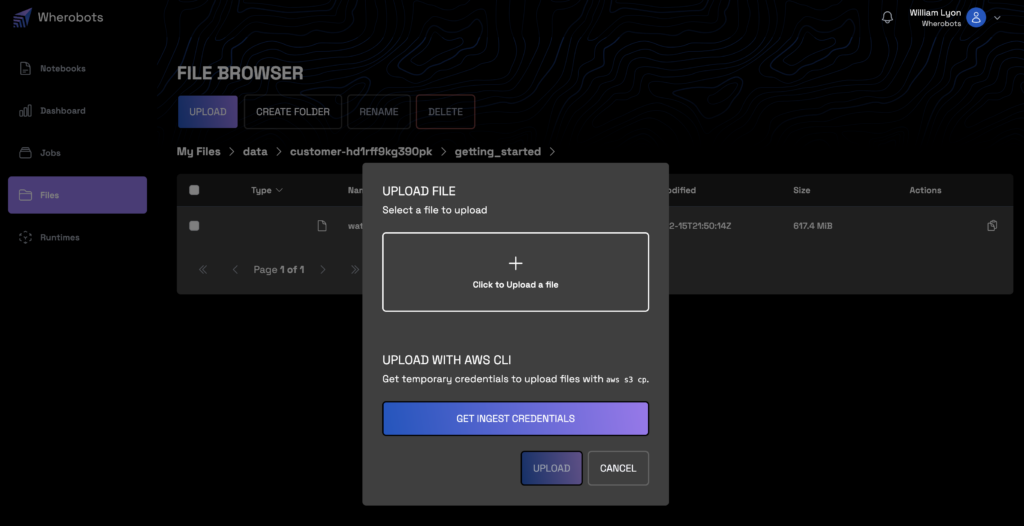

There are two options for uploading our own data into Wherobots Cloud:

- Via the file browser UI in the Wherobots Cloud web application

- Using the AWS CLI by generating temporary ingest credentials

We’ll explore both options, first using the file browser UI. We will upload a GeoJSON file of the US Watershed Boundary Dataset. In the Wherobots Cloud web application, navigate to the “Files” tab then select the “data” directory. Here you’ll see two folders, one with the name customer-XXXXXXXXX and the other shared. The “customer” folder is private to each Wherobots Cloud user while the “shared” folder is private to the users within your Wherobots Cloud organization.

We can upload files using the “Upload” button. Once the file is uploaded we can click on the clipboard icon to the right of the filename to copy the S3 URL for this file to access it in the notebook environment.

We’ll save the S3 URL for this GeoJSON file as a variable to refer to later. Note that this URL is private to my Wherobots user and not publicly accessible, so if you’re following along you’ll have a different URL.

S3_URL_JSON = "s3://wbts-wbc-m97rcg45xi/qjnq6fcbf1/data/customer-hd1rff9kg390pk/getting_started/watershed_boundaries.geojson"

WherobotsDB supports native readers for many file types, including GeoJSON, so we’ll specify the “geojson” format to import our watersheds data into a Spatial DataFrame. This is a multiline GeoJSON file, where the features are contained in one large single object. WherobotsDB can also handle GeoJSON files with each feature in a single line. Refer to the documentation here for more information about working with GeoJSON files in WherobotsDB.

watershed_df = sedona.read.format("geojson"). \
    option("multiLine", "true"). \
    load(S3_URL_JSON). \
    selectExpr("explode(features) as features"). \
    select("features.*")

watershed_df.createOrReplaceTempView("watersheds")
watershed_df.printSchema()

root
 |-- geometry: geometry (nullable = true)
 |-- properties: struct (nullable = true)
 |    |-- areaacres: double (nullable = true)
 |    |-- areasqkm: double (nullable = true)
 |    |-- globalid: string (nullable = true)
 |    |-- huc6: string (nullable = true)
 |    |-- loaddate: string (nullable = true)
 |    |-- metasourceid: string (nullable = true)
 |    |-- name: string (nullable = true)
 |    |-- objectid: long (nullable = true)
 |    |-- referencegnis_ids: string (nullable = true)
 |    |-- shape_Area: double (nullable = true)
 |    |-- shape_Length: double (nullable = true)
 |    |-- sourcedatadesc: string (nullable = true)
 |    |-- sourcefeatureid: string (nullable = true)
 |    |-- sourceoriginator: string (nullable = true)
 |    |-- states: string (nullable = true)
 |    |-- tnmid: string (nullable = true)
 |-- type: string (nullable = true)

The WherobotsDB GeoJSON loader parses GeoJSON exactly as it is stored, as a single object, so we explode the features column to get one row per feature, each containing the feature’s geometry and a struct of its associated properties.

The geometries stored in GeoJSON are loaded as geometry types so we can operate on the DataFrame without explicitly creating geometries (as we did when loading the CSV file above).
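To make the explode step more concrete, here is a minimal sketch using only Python’s json module on a tiny, hypothetical FeatureCollection. The watershed names and huc6 codes are borrowed from results shown later in this post, but the point geometries are made up; real watershed features are polygons with many more properties.

```python
import json

# A tiny hypothetical FeatureCollection stored as one multiline JSON object.
GEOJSON_TEXT = """
{
  "type": "FeatureCollection",
  "features": [
    {"type": "Feature",
     "geometry": {"type": "Point", "coordinates": [-122.4, 37.8]},
     "properties": {"name": "San Francisco Bay", "huc6": "180500"}},
    {"type": "Feature",
     "geometry": {"type": "Point", "coordinates": [-120.2, 39.3]},
     "properties": {"name": "Truckee", "huc6": "160501"}}
  ]
}
"""

collection = json.loads(GEOJSON_TEXT)

# "Explode": one output row per element of the features array,
# keeping each feature's top-level fields as columns.
rows = [
    {"geometry": f["geometry"], "properties": f["properties"], "type": f["type"]}
    for f in collection["features"]
]

for row in rows:
    print(row["properties"]["name"], row["geometry"]["type"])
```

The resulting rows have the same three top-level fields as the DataFrame schema above: geometry, properties, and type.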

Here we filter for all watersheds whose states property includes California (CA) and visualize them using SedonaKepler.

california_df = sedona.sql("""
SELECT geometry, properties.name, properties.huc6
FROM watersheds
WHERE properties.states LIKE '%CA%'
""")

SedonaKepler.create_map(df=california_df, name="California Watersheds")

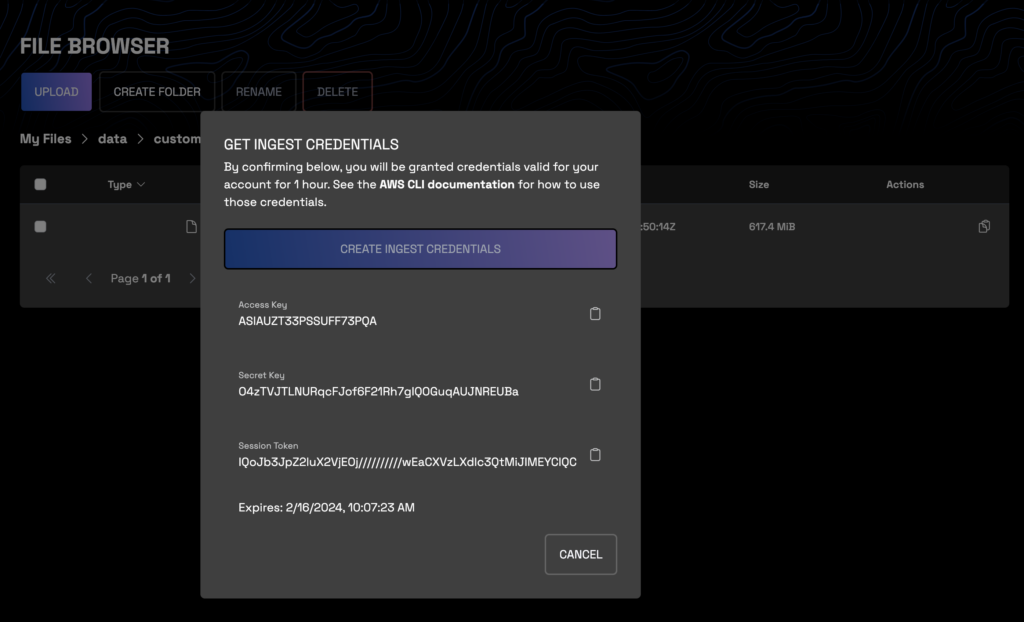
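Note that the filter above is a text match on the states property rather than a geometric test, so a watershed that spans a state line is included as long as CA appears in its list of states. A small sketch of that matching logic follows; the rows and the comma-separated format of the states value are assumptions made for illustration.

```python
# Hypothetical rows; the comma-separated format of the states value is an assumption.
watersheds = [
    {"name": "San Francisco Bay", "states": "CA"},
    {"name": "Truckee", "states": "CA,NV"},
    {"name": "Example Basin", "states": "NV,UT"},
]


def matches_like_contains(value: str, needle: str) -> bool:
    """Mimic SQL `value LIKE '%needle%'` for a needle without wildcards."""
    return needle in value


california = [w["name"] for w in watersheds if matches_like_contains(w["states"], "CA")]
print(california)
```

If only the portion of each watershed inside California were needed, a geometric operation against a California boundary polygon would be required instead of a string filter.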

Uploading Files To Wherobots Cloud Using The AWS CLI

If we have large files or many files to upload to Wherobots Cloud, instead of uploading files through the file browser web application we can use the AWS CLI directly by generating temporary ingest credentials. After clicking “Upload” select “Create Ingest Credentials” to create temporary credentials that can be used with the AWS CLI to upload data into your private Wherobots Cloud file storage.

Once we generate our credentials we’ll need to configure the AWS CLI to use these credentials by adding them to a profile in the ~/.aws/credentials file (on Mac/Linux systems) or by running the aws configure command. See this page for more information on working with the AWS CLI.

[default]
aws_access_key_id = <YOUR_ACCESS_KEY_ID>
aws_secret_access_key = <YOUR_SECRET_ACCESS_KEY>
aws_session_token = <YOUR_SESSION_TOKEN>

Let’s upload a Shapefile of US Forest Service roads to our Wherobots Cloud file storage and then import it using WherobotsDB. The command to upload our Shapefile data will look like this (your S3 URL will be different). Note that we use the --recursive flag since we want to upload the multiple files that make up a Shapefile.

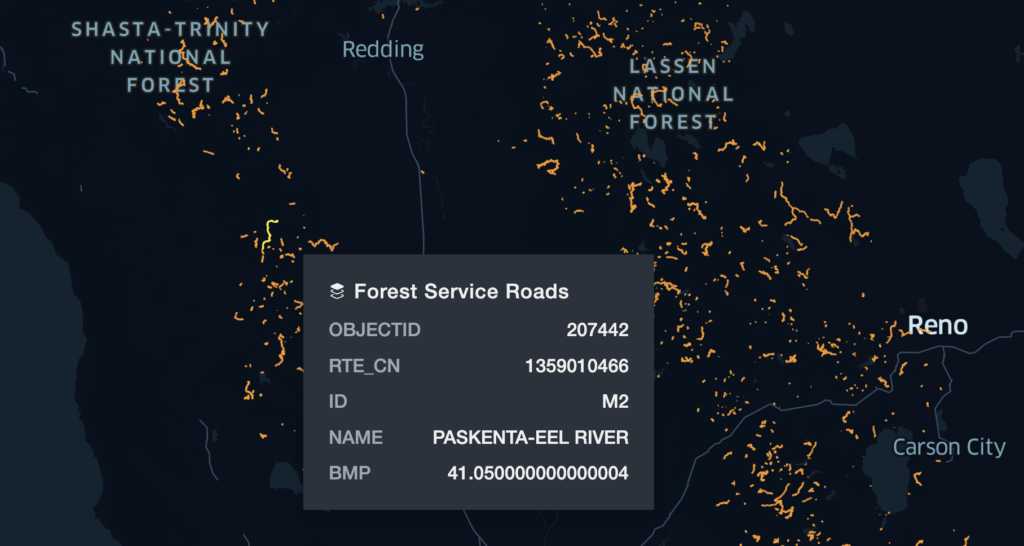

aws s3 cp --recursive roads_shapefile s3://wbts-wbc-m97rcg45xi/qjnq6fcbf1/data/customer-hd1rff9kg390pk/getting_started/roads_shapefile

Back in our notebook, we can use WherobotsDB’s Shapefile Reader, which first generates a Spatial RDD from the Shapefile that we can then convert to a Spatial DataFrame. See this page in the documentation for more information about working with Shapefile data in Wherobots.

S3_URL_SHAPEFILE = "s3://wbts-wbc-m97rcg45xi/qjnq6fcbf1/data/customer-hd1rff9kg390pk/getting_started/roads_shapefile"

spatialRDD = ShapefileReader.readToGeometryRDD(sedona, S3_URL_SHAPEFILE)
roads_df = Adapter.toDf(spatialRDD, sedona)
roads_df.printSchema()

We can visualize a sample of our forest service roads using SedonaKepler.

SedonaKepler.create_map(df=roads_df.sample(0.1), name="Forest Service Roads")
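Since a Shapefile is really a set of sibling files, it can be worth checking that the folder is complete before uploading it with --recursive. The sketch below checks for the three mandatory parts (.shp, .shx, .dbf); the helper name and the placeholder file names are hypothetical.

```python
import tempfile
from pathlib import Path

REQUIRED_SUFFIXES = {".shp", ".shx", ".dbf"}  # mandatory parts of a Shapefile


def missing_shapefile_parts(directory: Path, stem: str) -> set:
    """Return the required suffixes that are absent for the given base name."""
    present = {p.suffix.lower() for p in directory.iterdir() if p.stem == stem}
    return REQUIRED_SUFFIXES - present


# Demo with empty placeholder files in a temporary directory (hypothetical names).
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for suffix in (".shp", ".dbf", ".prj"):
        (folder / f"roads{suffix}").touch()
    missing = missing_shapefile_parts(folder, "roads")

print(missing)
```

In this demo the index file (.shx) is reported as missing. The optional .prj file, which carries the coordinate reference system, is not required by the check but is usually worth uploading too.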

Working With Raster Data – Loading GeoTiff Raster Files

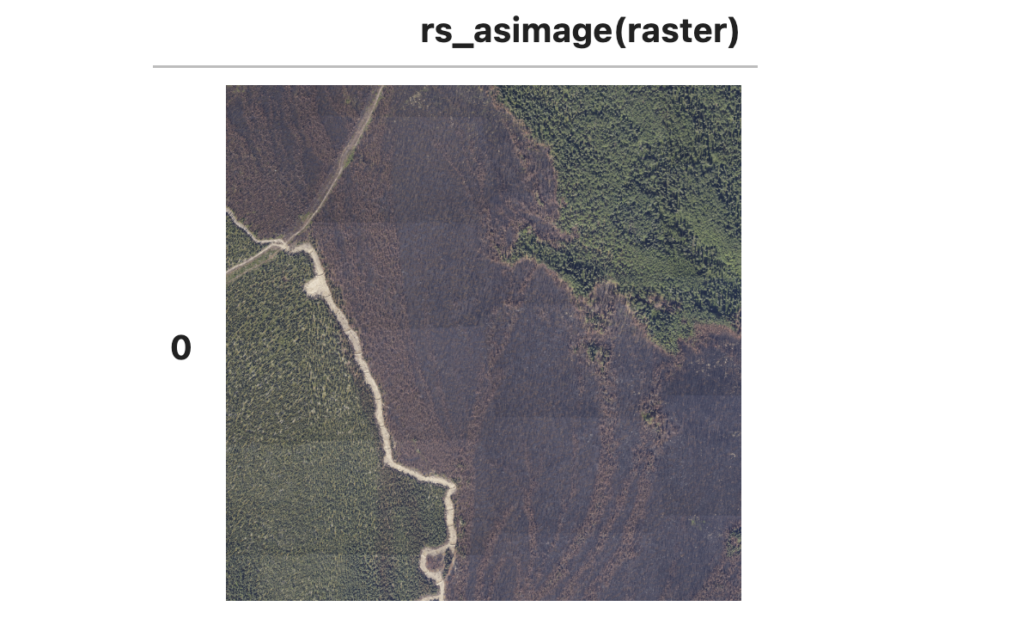

So far we’ve been working with vector data: geometries and their associated properties. We can also work with raster data in Wherobots Cloud. Let’s load a GeoTiff as an out-db raster using the RS_FromPath Spatial SQL function. Once we’ve loaded the raster image we can use Spatial SQL to manipulate and work with the band data of our raster.

ortho_url = "s3://wherobots-examples/data/examples/NEON_ortho.tif"

ortho_df = sedona.sql(f"SELECT RS_FromPath('{ortho_url}') AS raster")
ortho_df.createOrReplaceTempView("ortho")
ortho_df.show(truncate=False)

For example, we can use the RS_AsImage function to visualize the GeoTIFF, in this case an aerial image of a forest and road scene.

htmlDf = sedona.sql("SELECT RS_AsImage(raster) FROM ortho")

SedonaUtils.display_image(htmlDf)

This image has three bands of data: red, green, and blue pixel values.

sedona.sql("SELECT RS_NumBands(raster) FROM ortho").show()

+-------------------+
|rs_numbands(raster)|
+-------------------+
|                  3|
+-------------------+

We can use the RS_MapAlgebra function to calculate the normalized difference greenness index (NDGI), a metric similar to the normalized difference vegetation index (NDVI) used for quantifying the health and density of vegetation and landcover. The RS_MapAlgebra function allows us to execute complex computations using values from one or more bands and also across multiple rasters, for example if we had a sequence of images across time and were interested in change detection.
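The map algebra expression in the query that follows divides the difference of two bands by their sum for every pixel. Here is a minimal plain-Python sketch of that arithmetic, assuming band index 0 is red and index 1 is green (matching the band order described above) and using made-up pixel values. The zero-sum guard is a choice made for this sketch, not a statement about how RS_MapAlgebra behaves.

```python
def ndgi(red: float, green: float) -> float:
    """Normalized difference of the green and red values for one pixel."""
    total = green + red
    if total == 0:
        # Assumption for this sketch: report 0 instead of dividing by zero.
        return 0.0
    return (green - red) / total


# Hypothetical (red, green) pixel values in the 0-255 range.
pixels = [(50, 150), (100, 100), (200, 50)]
values = [ndgi(r, g) for r, g in pixels]
print(values)
```

Values near 1 indicate pixels where green dominates red, values near -1 indicate the opposite, and 0 means the two bands are equal.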

ndgi_df = sedona.sql("""
SELECT RS_MapAlgebra(raster, 'D', 'out = (rast[1] - rast[0]) / (rast[1] + rast[0]);')
    AS ndgi
FROM ortho
""")

Writing Files With Wherobots Cloud

A common workflow with Wherobots Cloud is to load several files, perform some geospatial analysis and save the results as part of a larger data pipeline, often using GeoParquet. GeoParquet is a cloud-native file format that enables efficient data storage and retrieval of geospatial data.

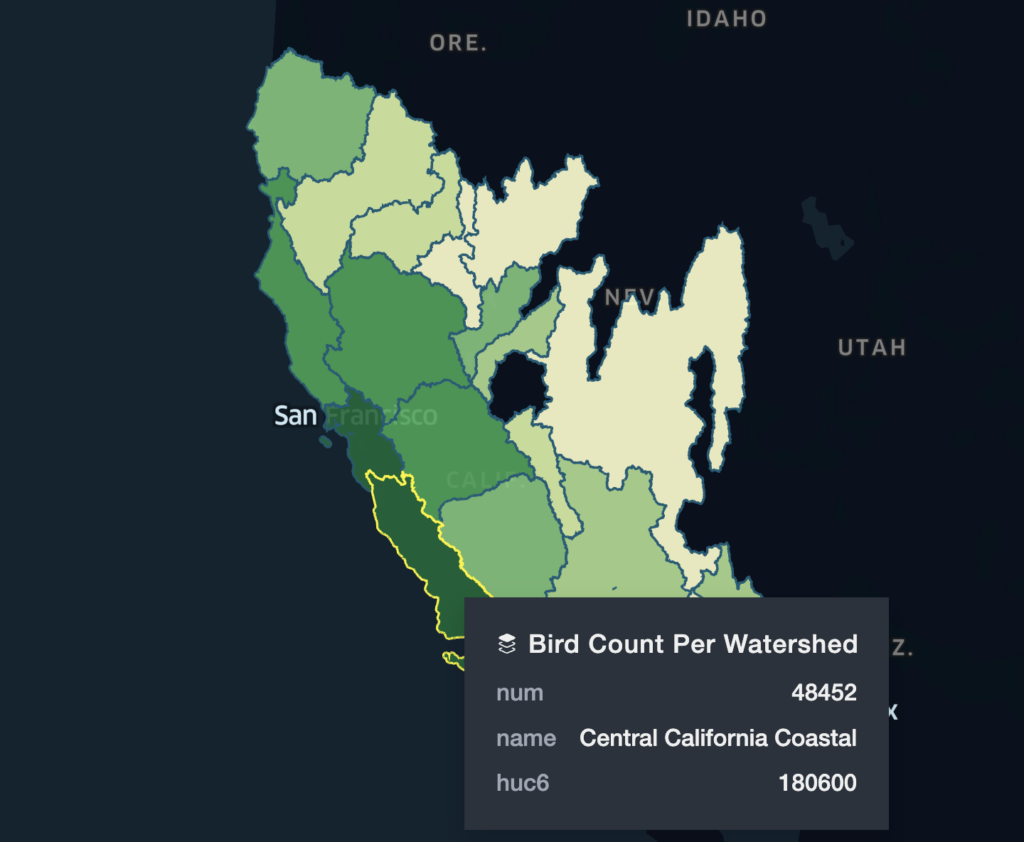

Let’s perform some geospatial analysis using the data we loaded above and save the results as GeoParquet to our Wherobots Cloud S3 bucket. We’ll perform a spatial join of our bird observations, joining with the boundaries of our watersheds and then with a GROUP BY calculating the number of bird observations in each watershed.

birdshed_df = sedona.sql("""
SELECT
    COUNT(*) AS num,
    any_value(watersheds.geometry) AS geometry,
    any_value(watersheds.properties.name) AS name,
    any_value(watersheds.properties.huc6) AS huc6
FROM bb, watersheds
WHERE ST_Contains(watersheds.geometry, bb.location)
    AND watersheds.properties.states LIKE '%CA%'
GROUP BY watersheds.properties.huc6
ORDER BY num DESC
""")

birdshed_df.show()

Here we can see the watersheds with the highest number of bird observations in our dataset.

+------+--------------------+--------------------+------+
|   num|            geometry|                name|  huc6|
+------+--------------------+--------------------+------+
|138414|MULTIPOLYGON (((-...|   San Francisco Bay|180500|
| 75006|MULTIPOLYGON (((-...|Ventura-San Gabri...|180701|
| 74254|MULTIPOLYGON (((-...|Laguna-San Diego ...|180703|
| 48452|MULTIPOLYGON (((-...|Central Californi...|180600|
| 33842|MULTIPOLYGON (((-...|    Lower Sacramento|180201|
| 20476|MULTIPOLYGON (((-...|           Santa Ana|180702|
| 17014|MULTIPOLYGON (((-...|         San Joaquin|180400|
| 15288|MULTIPOLYGON (((-...|Northern Californ...|180101|
| 13636|MULTIPOLYGON (((-...|             Truckee|160501|
|  9964|MULTIPOLYGON (((-...|Southern Oregon C...|171003|
|  6864|MULTIPOLYGON (((-...|Tulare-Buena Vist...|180300|
|  5120|MULTIPOLYGON (((-...|     Northern Mojave|180902|
|  3660|MULTIPOLYGON (((-...|          Salton Sea|181002|
|  2362|MULTIPOLYGON (((-...|              Carson|160502|
|  1040|MULTIPOLYGON (((-...|      Lower Colorado|150301|
|   814|MULTIPOLYGON (((-...|    Mono-Owens Lakes|180901|
|   584|MULTIPOLYGON (((-...|             Klamath|180102|
|   516|MULTIPOLYGON (((-...|    Upper Sacramento|180200|
|   456|MULTIPOLYGON (((-...|      North Lahontan|180800|
|   436|MULTIPOLYGON (((-...|Central Nevada De...|160600|
+------+--------------------+--------------------+------+
only showing top 20 rows

We can also visualize this data as a choropleth using SedonaKepler.
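To illustrate the join-then-count logic of the query above at a small scale, here is a plain-Python sketch that tests which polygon contains each point and then counts points per polygon. It uses a simple ray-casting test on hypothetical square regions; it is only a conceptual stand-in and says nothing about how WherobotsDB executes ST_Contains or plans the spatial join.

```python
from collections import Counter


def contains(polygon, point) -> bool:
    """Ray-casting test: is the point inside the polygon (list of (x, y) vertices)?"""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Count edges that a horizontal ray going right from the point crosses.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical square "watersheds" keyed by a made-up id, and a few points.
regions = {
    "A": [(0, 0), (2, 0), (2, 2), (0, 2)],
    "B": [(2, 0), (4, 0), (4, 2), (2, 2)],
}
observations = [(1, 1), (0.5, 1.5), (3, 1), (5, 5)]

# Join each point to the region containing it, then count per region.
counts = Counter(
    region_id
    for point in observations
    for region_id, polygon in regions.items()
    if contains(polygon, point)
)
print(counts.most_common())
```

The point (5, 5) falls outside both regions and is dropped, just as observations outside every selected watershed do not contribute to any count in the query.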

The GeoParquet data we’ll save will include the geometry of each watershed boundary, the count of bird observations, and the name and id of each watershed. We’ll save this GeoParquet file to our Wherobots private S3 bucket. Previously we accessed our S3 URL via the Wherobots File Browser UI, but we can also access this URI as an environment variable in the notebook environment.

import os

USER_S3_PATH = os.environ.get("USER_S3_PATH")

Since this file isn’t very large we’ll save it as a single partition, but typically we would want to save GeoParquet files partitioned on a geospatial index or administrative boundary. The WherobotsDB GeoParquet writer will add the geospatial metadata when saving as GeoParquet. See this page in the documentation for more information about creating GeoParquet files with Sedona.
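The write call that follows builds the output location by string concatenation, which relies on USER_S3_PATH ending with a slash. If we’d rather not depend on that, a small helper can normalize the join. This is a sketch only: join_s3_path is a hypothetical helper, and the fallback bucket is a placeholder so the snippet also runs outside the Wherobots notebook environment.

```python
import os


def join_s3_path(prefix: str, key: str) -> str:
    """Join an S3 prefix and a relative key with exactly one slash between them."""
    return prefix.rstrip("/") + "/" + key.lstrip("/")


# USER_S3_PATH is set in the Wherobots notebook environment; the fallback
# value below is a placeholder, not a real bucket.
user_s3_path = os.environ.get("USER_S3_PATH", "s3://example-bucket/data/customer-demo/")
output_path = join_s3_path(user_s3_path, "geoparquet/birdshed.parquet")
print(output_path)
```

The resulting output_path could be passed to save() in place of the concatenated string.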

birdshed_df.repartition(1).write.mode("overwrite"). \
    format("geoparquet"). \
    save(USER_S3_PATH + "geoparquet/birdshed.parquet")

That was a look at working with files in Wherobots Cloud. You can get started with large-scale geospatial analytics by creating a free account at cloud.wherobots.com. Please join the Wherobots & Apache Sedona Community site and let us know what you’re working on with Wherobots, what type of examples you’d like to see next, or if you have any feedback!
