Unlocking the Spatial Frontier: The Evolution and Potential of Spatial Technology in Apple Vision Pro and Augmented Reality Apps

The evolution of Augmented Reality (AR) from the realm of science fiction to tangible, practical applications like Augmented Driving, Pokémon Go, and Meta Quest marked a significant shift in how we interact with technology and perceive our surroundings. The recent introduction of Apple Vision Pro underscores this transition, bringing AR closer to mainstream adoption. While the ultimate fate of devices like Apple Vision Pro or Meta Quest remains uncertain, their technological capabilities are undeniably impressive.

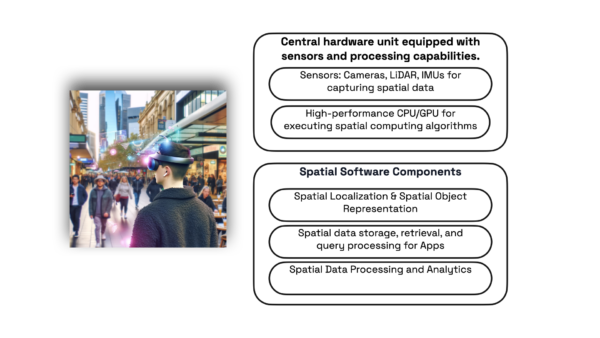

One of the key components of Apple Vision Pro is what Apple refers to as "Spatial Computing." While the term itself isn’t novel, with decades of research exploring the utilization of spatial context in computing, Apple’s interpretation focuses primarily on integrating spatial environments into virtual computing environments and vice versa. This approach builds upon established research in spatial object localization, representation, and spatial query processing. Moreover, it opens doors to leveraging spatial analytics, potentially offering insights and functionalities previously unimaginable.

Despite its roots in earlier research and literature like "Spatial Computing" by Shashi Shekhar and Pamela Vold, Apple’s redefinition underscores a shift in focus towards immersive spatial experiences within computing environments. By leveraging advancements in technology and innovative approaches, Apple and other companies are pushing the boundaries of what’s possible in AR, paving the way for exciting new applications and experiences. This article highlights the technical challenges Apple had to overcome to reach this milestone and lays the groundwork for future improvements.

Spatial object localization and presentation in Apple Vision Pro

To work properly, devices like Apple Vision Pro first had to solve challenges in object localization: the system must determine not only the user’s location but also the location of objects within the camera’s line of sight. Existing outdoor and indoor localization technologies provide a foundation, but traditional methods face limitations in augmented reality contexts. Apple Vision Pro solved challenges such as object positions that vary with the camera angle and real-time localization of moving objects. It also did a great job of integrating advanced technologies including image processing, artificial intelligence, and deep learning. Promising research directions for making such devices usable in outdoor environments include semantic embeddings, depth cameras, and trajectory-based map matching algorithms. By combining these approaches, the aim is to achieve real-time, high-accuracy object localization across different environments while minimizing device power consumption.

Apple Vision Pro does a fantastic job of presenting virtual data alongside real-world objects captured by the device’s camera. Unlike traditional user interfaces, augmented reality interfaces must integrate augmented data carefully to avoid distorting the user’s view and creating safety hazards, for example while driving or crossing the street. Apple Vision Pro does not yet completely solve this problem, so there is room for improvement. I believe a big next step for these devices is to maintain the visual clarity and relevance of augmented data, drawing on existing research in virtual reality applications and location-aware recommendation techniques. For example, one direction is to present augmented reality spatial objects to users as audio messages. This alternative modality offers advantages in scenarios where visual attention is already heavily taxed, such as driving. However, an essential aspect of this approach is ranking the augmented spatial objects to determine their size and prominence, which keeps users engaged while minimizing distractions.
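To make the ranking idea concrete, here is a minimal Python sketch. It is an illustration under assumptions, not how Apple Vision Pro ranks content: the `Overlay` class, the `relevance` score, and the linear distance discount are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Overlay:
    """A hypothetical augmented object that could be shown to the user."""
    name: str
    distance_m: float  # distance from the user, in meters
    relevance: float   # app-defined relevance in [0, 1] (assumption)


def rank_overlays(overlays, max_items=3, max_distance_m=200.0):
    """Return at most max_items overlays, best first.

    The score is relevance discounted linearly by distance; overlays
    farther away than max_distance_m are dropped entirely.
    """
    def score(o):
        proximity = max(0.0, 1.0 - o.distance_m / max_distance_m)
        return o.relevance * proximity

    candidates = [o for o in overlays if o.distance_m <= max_distance_m]
    return sorted(candidates, key=score, reverse=True)[:max_items]


overlays = [
    Overlay("cafe", 50.0, 0.9),
    Overlay("museum", 150.0, 1.0),
    Overlay("far landmark", 500.0, 1.0),
    Overlay("atm", 10.0, 0.2),
]
top = rank_overlays(overlays, max_items=2)
print([o.name for o in top])  # ['cafe', 'museum']
```

Capping the number of returned overlays is one simple way to limit clutter; the same ranked list could also decide which objects are announced first in an audio interface.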

The role of spatial query processing in Apple Vision Pro

Like the iPhone, Apple Vision Pro comes equipped with a range of apps designed to leverage its capabilities. These apps use the mixed reality environment by issuing queries to retrieve spatial objects and presenting them within the immersive experience that Vision Pro provides. For example, a navigation app using Apple Vision Pro might issue queries to fetch spatial objects such as points of interest, landmarks, or navigation markers. These objects would then be presented within the user’s field of view, overlaying relevant information onto the physical world through the device’s display. Similarly, an education app could retrieve spatial objects representing interactive learning materials or virtual models, enriching the user’s understanding of their surroundings.

To achieve this, the apps would communicate with the mixed reality environment, likely through APIs or SDKs provided by Apple’s developer tools. These interfaces would allow the apps to issue spatial queries to the environment, specifying parameters such as location, distance, and relevance criteria. The mixed reality environment would then return relevant spatial objects based on these queries, which the apps can seamlessly integrate into the user’s immersive experience. By leveraging the capabilities of Apple Vision Pro and interacting with the mixed reality environment, these apps can provide users with rich, context-aware experiences that enhance their understanding and interaction with the world around them. Whether for navigation, education, gaming, or other purposes, the ability to issue queries and retrieve spatial objects is fundamental to unlocking the full potential of Vision Pro’s immersive capabilities.
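As a rough illustration of such a query, the Python sketch below filters an in-memory list of objects by location, distance, and relevance. It does not use any Apple API: the function names, the dictionary layout (`lat`, `lon`, `relevance`), and the relevance threshold are assumptions made for the example, and a real app would delegate this work to a spatial index or database.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points, in meters."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def query_nearby(objects, lat, lon, radius_m, min_relevance=0.0):
    """Return objects within radius_m of (lat, lon), nearest first.

    Each object is a dict with 'lat', 'lon', and 'relevance' keys
    (a hypothetical schema chosen for this sketch).
    """
    hits = []
    for obj in objects:
        d = haversine_m(lat, lon, obj["lat"], obj["lon"])
        if d <= radius_m and obj["relevance"] >= min_relevance:
            hits.append((d, obj))
    hits.sort(key=lambda pair: pair[0])
    return [obj for _, obj in hits]


points_of_interest = [
    {"name": "fountain", "lat": 0.0, "lon": 0.001, "relevance": 0.8},
    {"name": "stadium", "lat": 0.0, "lon": 0.01, "relevance": 0.9},
    {"name": "kiosk", "lat": 0.0, "lon": 0.002, "relevance": 0.1},
]
nearby = query_nearby(points_of_interest, 0.0, 0.0, radius_m=500.0, min_relevance=0.5)
print([p["name"] for p in nearby])  # ['fountain']
```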

However, the classic rectangular or circular range query processing techniques may need to be redefined to accommodate the camera range and line of sight. While the camera view can still be formulated as a rectangular range query, this approach may not be very efficient, because not every spatial object within the camera range needs to be retrieved: the more augmented spatial objects are stitched into the camera scene, the more distorted the user’s view of the physical world becomes. Furthermore, every time the camera’s line of sight changes, the system issues a new range query to the database, which may violate the real-time constraints imposed by Apple Vision Pro applications.

To optimize the performance of Apple Vision Pro applications, it’s essential to redefine the spatial range query to accurately account for the camera range and line of sight. This could involve implementing algorithms that dynamically adjust the spatial query based on the camera’s current view and line of sight. By doing so, only the relevant augmented spatial objects within the camera’s field of view need to be retrieved, minimizing distortion and ensuring real-time performance for Apple Vision Pro applications.
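One simple way to picture a view-aware range query is a sector (wedge) test in place of a rectangle. The Python sketch below is a simplified 2D illustration, not the method Vision Pro uses: it assumes local planar coordinates in meters, a heading measured counterclockwise from the +x axis, and hypothetical function names. It also ignores occlusion, so it approximates the field of view rather than true line of sight.

```python
import math


def in_field_of_view(cam_xy, heading_deg, fov_deg, max_range_m, point_xy):
    """True if point_xy lies inside the camera's 2D view sector."""
    dx = point_xy[0] - cam_xy[0]
    dy = point_xy[1] - cam_xy[1]
    dist = math.hypot(dx, dy)
    if dist > max_range_m:
        return False
    if dist == 0:
        return True  # the point coincides with the camera position
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle between bearing and heading, in [-180, 180).
    diff = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def fov_query(points, cam_xy, heading_deg, fov_deg=90.0, max_range_m=50.0):
    """Return only the points inside the view sector."""
    return [
        p for p in points
        if in_field_of_view(cam_xy, heading_deg, fov_deg, max_range_m, p)
    ]


points = [(10.0, 0.0), (10.0, 5.0), (0.0, 10.0), (-10.0, 0.0), (100.0, 0.0)]
visible = fov_query(points, cam_xy=(0.0, 0.0), heading_deg=0.0)
print(visible)  # [(10.0, 0.0), (10.0, 5.0)]
```

In practice a coarse rectangular query against a spatial index could fetch candidates first, with a sector test like this one applied as a cheap refinement step whenever the heading changes.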

The role of spatial data analytics in Apple Vision Pro

With the proliferation of applications for Apple Vision Pro, there will be a surge in the spatial data these apps accumulate. This data will capture users’ engagement with both the physical and virtual environments. Processing and analyzing it yields a deeper understanding of user behavior, which in turn helps developers optimize applications to better serve their user base. For instance, consider an Apple Vision Pro app for sightseeing. Here’s how the spatial analytics process might work:

- Data Collection: The sightseeing app collects spatial data from users as they navigate through the city using Apple Vision Pro. This data could include GPS coordinates, timestamps, images, and possibly other contextual information.

- Data Processing: The collected spatial data is processed to extract relevant information such as user trajectories, points of interest visited, time spent at each location, and any interactions within the virtual environment overlaid on the physical world.

- Analysis: Once the data is processed, various analytical techniques can be applied to gain insights. This might involve clustering similar user trajectories to identify popular routes, analyzing dwell times to determine the most engaging attractions, or detecting patterns in user interactions within virtual environments.

- Insights Generation: Based on the analysis, insights are generated about user behavior and preferences. For example, the app developers might discover that a certain landmark is highly popular among users, or that users tend to spend more time in areas with interactive virtual elements.

- Application Enhancement: Finally, these insights are used to enhance the sightseeing app. This could involve improving recommendations to users based on their preferences and behavior, optimizing the layout of virtual overlays to increase engagement, or developing new features to better cater to user needs.

By continuously collecting, processing, and analyzing spatial data, the sightseeing app can iteratively improve and evolve, ultimately providing a more personalized and engaging experience for its users. Additionally, users may benefit from discovering new attractions and experiences tailored to their interests, while also contributing to the collective knowledge base that fuels these improvements.
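The processing and analysis steps above can be sketched in a few lines of Python. This is a toy example under assumptions: the sample format `(user_id, timestamp_s, poi)` is hypothetical, each sample is assumed to be already matched to a point of interest (or `None` when the user is between attractions), and dwell time is approximated as the time between consecutive samples at the same place.

```python
from collections import defaultdict


def dwell_times(samples):
    """Total dwell time per point of interest, in seconds.

    samples: iterable of (user_id, timestamp_s, poi) tuples, where poi is
    a name or None. The tuple layout is an assumption of this sketch.
    """
    by_user = defaultdict(list)
    for user, ts, poi in samples:
        by_user[user].append((ts, poi))

    totals = defaultdict(float)
    for track in by_user.values():
        track.sort(key=lambda s: s[0])  # order each user's samples by time
        for (t0, p0), (t1, p1) in zip(track, track[1:]):
            # Count the interval only if the user stayed at the same place.
            if p0 is not None and p0 == p1:
                totals[p0] += t1 - t0
    return dict(totals)


samples = [
    ("u1", 0, "tower"), ("u1", 60, "tower"), ("u1", 120, None),
    ("u1", 180, "museum"), ("u1", 300, "museum"),
    ("u2", 0, "tower"), ("u2", 30, "tower"),
]
totals = dwell_times(samples)
print(totals)                           # {'tower': 90.0, 'museum': 120.0}
print(max(totals, key=totals.get))      # museum
```

At the scale of a real app this aggregation would run on a distributed spatial analytics engine; the in-memory version only shows the shape of the computation.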

Similarly, a hiking app on Apple Vision Pro could collect spatial data representing users’ interactions with the physical environment while hiking, such as the trails they take, the points of interest they stop at, and the duration of their hikes. It could also capture interactions with virtual elements overlaid on the real-world environment, such as augmented reality trail markers or informational overlays.

By processing and analyzing this data, the hiking app can gain valuable insights into user behavior. For example, it could identify popular hiking routes, points of interest along those routes, and common user preferences or patterns. This information can then be used to improve the app’s functionality and tailor it to better serve its user base.

For instance, the app could suggest personalized hiking routes based on a user’s past behavior and preferences. It could also provide real-time notifications about points of interest or hazards along the trail, based on data collected from previous users’ interactions. Additionally, the app could use machine learning algorithms to predict future user behavior and offer proactive suggestions or recommendations.

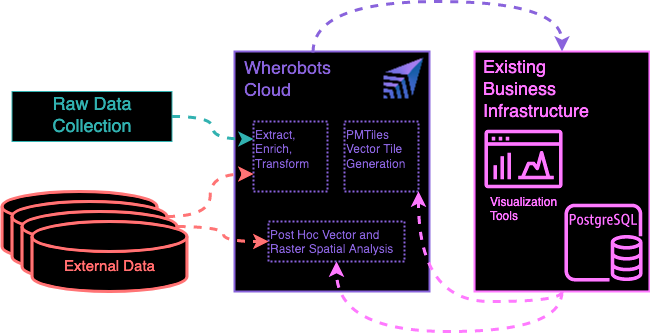

To leverage spatial analytics effectively, apps require a scalable and user-friendly spatial data analytics platform. This platform should be capable of handling the massive and intricate spatial data collected from AR devices, allowing users to execute spatial analytics queries efficiently without having to tune compute resources for such workloads. This aligns perfectly with our mission at Wherobots. We envision every Apple Vision Pro app utilizing Wherobots as its all-in-one cloud platform for running spatial data processing and analytics tasks. By fully leveraging spatial analytics, Apple Vision Pro and its app ecosystem could unlock a host of new possibilities for augmented reality experiences:

- Personalized Recommendations: Spatial analytics could enable Apple Vision Pro to analyze users’ past interactions and preferences to offer highly personalized recommendations. For example, the device could suggest nearby attractions based on a user’s interests or recommend routes tailored to their preferences.

- Predictive Capabilities: By analyzing spatial data in real-time, Apple Vision Pro could anticipate users’ needs and actions, providing proactive assistance and guidance. For instance, the device could predict congestion or obstacles along a chosen route and suggest alternative paths to optimize the user’s journey.

- Enhanced Immersion: Spatial analytics could enrich augmented reality experiences by dynamically adapting virtual content based on the user’s environment and behavior. This could include adjusting the placement of virtual objects to align with real-world features or modifying virtual interactions to better suit the user’s context.

- Insightful Analytics: Spatial analytics could provide valuable insights into user behavior and spatial patterns, enabling developers to optimize their applications and experiences. For example, developers could analyze heatmaps of user activity to identify popular areas or assess the effectiveness of virtual overlays in guiding users.

- Advanced Navigation: Spatial analytics could power advanced navigation features, such as indoor positioning and navigation assistance. Apple Vision Pro could leverage spatial data to provide precise directions within complex indoor environments, helping users navigate malls, airports, and other large venues with ease.

By harnessing the power of spatial analytics, Apple Vision Pro has the potential to redefine how we interact with augmented reality and transform numerous industries, from retail and tourism to education and healthcare. As the technology continues to evolve, we can expect even more innovative applications and experiences to emerge, further blurring the lines between the physical and virtual worlds.
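As one small example of the analytics listed above, the activity heatmap mentioned under Insightful Analytics can be approximated by counting positions per grid cell. The Python sketch below assumes positions are already projected to planar coordinates in meters; the function name and the square-grid scheme are illustrative choices, and hexagonal or hierarchical grids are common alternatives.

```python
import math
from collections import Counter


def grid_heatmap(points, cell_size_m):
    """Count points per square grid cell.

    points: iterable of (x, y) positions in meters (assumed planar).
    Returns a Counter keyed by integer (column, row) cell indices.
    """
    counts = Counter()
    for x, y in points:
        cell = (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        counts[cell] += 1
    return counts


positions = [(1.0, 1.0), (2.0, 3.0), (12.0, 1.0), (-1.0, 0.0)]
heat = grid_heatmap(positions, cell_size_m=10.0)
print(heat.most_common(1))  # [((0, 0), 2)]
```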

To wrap up:

Apple Vision Pro represents a significant advancement in the field of spatial computing, leveraging decades of research and development to integrate virtual and physical environments seamlessly. As the technology continues to evolve and mature, it holds the promise of revolutionizing various industries and everyday experiences, from gaming and entertainment to navigation and productivity. Advancements in GPUs (Graphics Processing Units) will also play a crucial role in running spatial computing and AI tasks efficiently with reduced energy consumption.

While Apple Vision Pro has yet to fully leverage spatial analytics, it holds significant potential for analyzing spatial data collected during user interactions. Spatial analytics involves extracting meaningful insights and patterns from spatial data, such as user trajectories, spatial relationships between objects, and spatial distributions of activity. By applying spatial analytics, Apple could further enhance the functionality and intelligence of its augmented reality experiences, enabling personalized recommendations, predictive capabilities, and more immersive interactions.